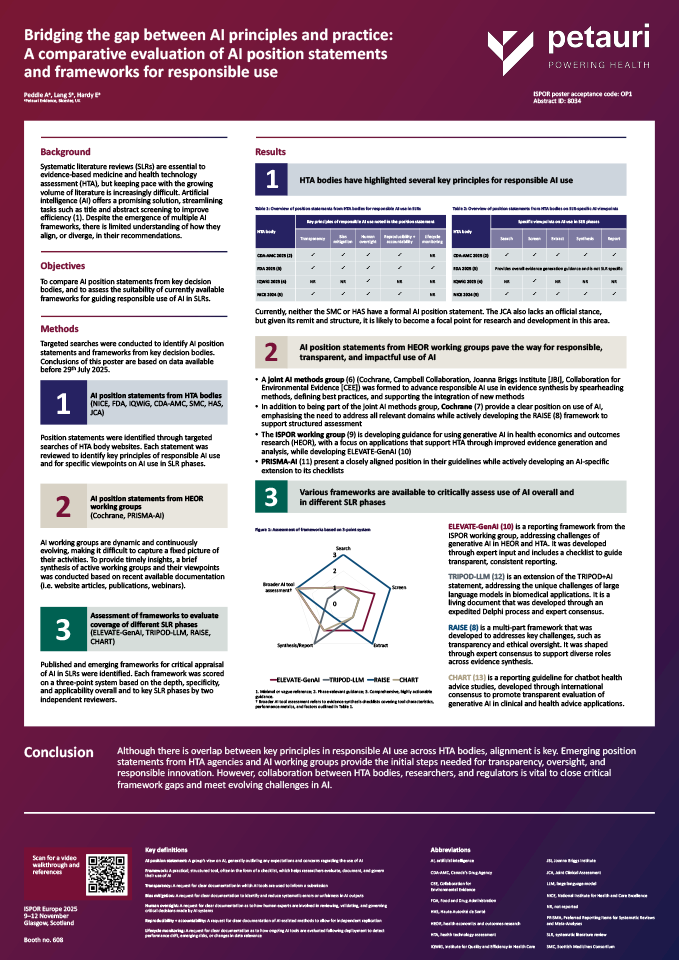

Bridging the gap between AI principles and practice: A comparative evaluation of AI position statements and frameworks for responsible use

The Petauri Evidence team will be presenting this research at ISPOR Europe in Glasgow, in Poster Session 1 on Monday 10th November.

In this short video, Amelia Peddle (Analyst – Systematic Review, Petauri Evidence) introduces the research:

Poster introduction:

Systematic literature reviews (SLRs) are essential to evidence-based medicine and health technology assessment (HTA), but keeping pace with the growing volume of literature is increasingly difficult. Artificial intelligence (AI) offers a promising solution, streamlining tasks such as title and abstract screening to improve efficiency (1). Despite the emergence of multiple AI frameworks, there is limited understanding of how they align, or diverge, in their recommendations.

Research objectives:

To compare AI position statements from key decision bodies, and to assess the suitability of currently available frameworks for guiding responsible use of AI in SLRs.



To help save the planet and to save you from carrying a tonne of literature around the exhibition hall, all our resources are available virtually.

Poster references:

- Chan GCK, et al. A comprehensive systematic review dataset is a rich resource for training and evaluation of AI systems for title and abstract screening. 2025;16(2):308-22.

- CDA-AMC. Canada’s Drug Agency Position Statement on the Use of Artificial Intelligence in the Generation and Reporting of Evidence. 2024. Available from: https://www.cda-amc.ca/sites/default/files/MG%20Methods/Position_Statement_AI_Renumbered.pdf. Accessed: September 2025.

- FDA. Considerations for the Use of Artificial Intelligence (AI) to Support Regulatory Decision-Making for Drug and Biological Products. 2025. Available from: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/considerations-use-artificial-intelligence-support-regulatory-decision-making-drug-and-biological. Accessed: September 2025.

- IQWiG. Could large language models and/or AI-based automation tools assist the screening process? 2023. Available from: https://training.cochrane.org/sites/training.cochrane.org/files/public/uploads/Could large language models and or AI-based automation tools assist the screening process.pdf. Accessed: September 2025.

- NICE. Use of AI in evidence generation: NICE position statement. 2024. Available from: https://www.nice.org.uk/position-statements/use-of-ai-in-evidence-generation-nice-position-statement. Accessed: September 2025.

- Campbell Collaboration. New AI Methods Group to spearhead adoption across four leading evidence synthesis organizations. Available from: https://www.campbellcollaboration.org/2025/03/new-ai-methods-group-to-spearhead-adoption-across-four-leading-evidence-synthesis-organizations/. Accessed: September 2025.

- Cochrane. Recommendations and guidance on responsible AI in evidence synthesis. Available from: https://www.cochrane.org/events/recommendations-and-guidance-responsible-ai-evidence-synthesis#Part%203. Accessed: September 2025.

- Thomas J, et al. RAISE 3 – selecting and using. 2025. Available from: https://osf.io/5xjpk. Accessed: September 2025.

- Fleurence RL, et al. Generative AI for Health Technology Assessment: Opportunities, Challenges, and Policy Considerations. arXiv. 2024.

- Fleurence RL, et al. ELEVATE-GenAI: Reporting Guidelines for the Use of Large Language Models in Health Economics and Outcomes Research: an ISPOR Working Group on Generative AI Report. 2024. Available from: https://arxiv.org/pdf/2501.12394. Accessed: September 2025.

- Cacciamani GE, et al. PRISMA AI Reporting Guidelines for Systematic Reviews and Meta-Analyses on AI in Healthcare. Nat Med. 2023;29(1):14-5.

- Gallifant J, et al. The TRIPOD-LLM reporting guideline for studies using large language models. Nat Med. 2025;31(1):60-9.

- CHART Collaborative. Reporting guidelines for chatbot health advice studies: explanation and elaboration for the Chatbot Assessment Reporting Tool (CHART). BMJ. 2025;390:e083305.